ChatGPT Sends Millions to Verified Election News, Blocks 250,000 Deepfake Attempts

AI

Zaker Adham

09 November 2024

22 August 2024 | Paikan Begzad

Summary

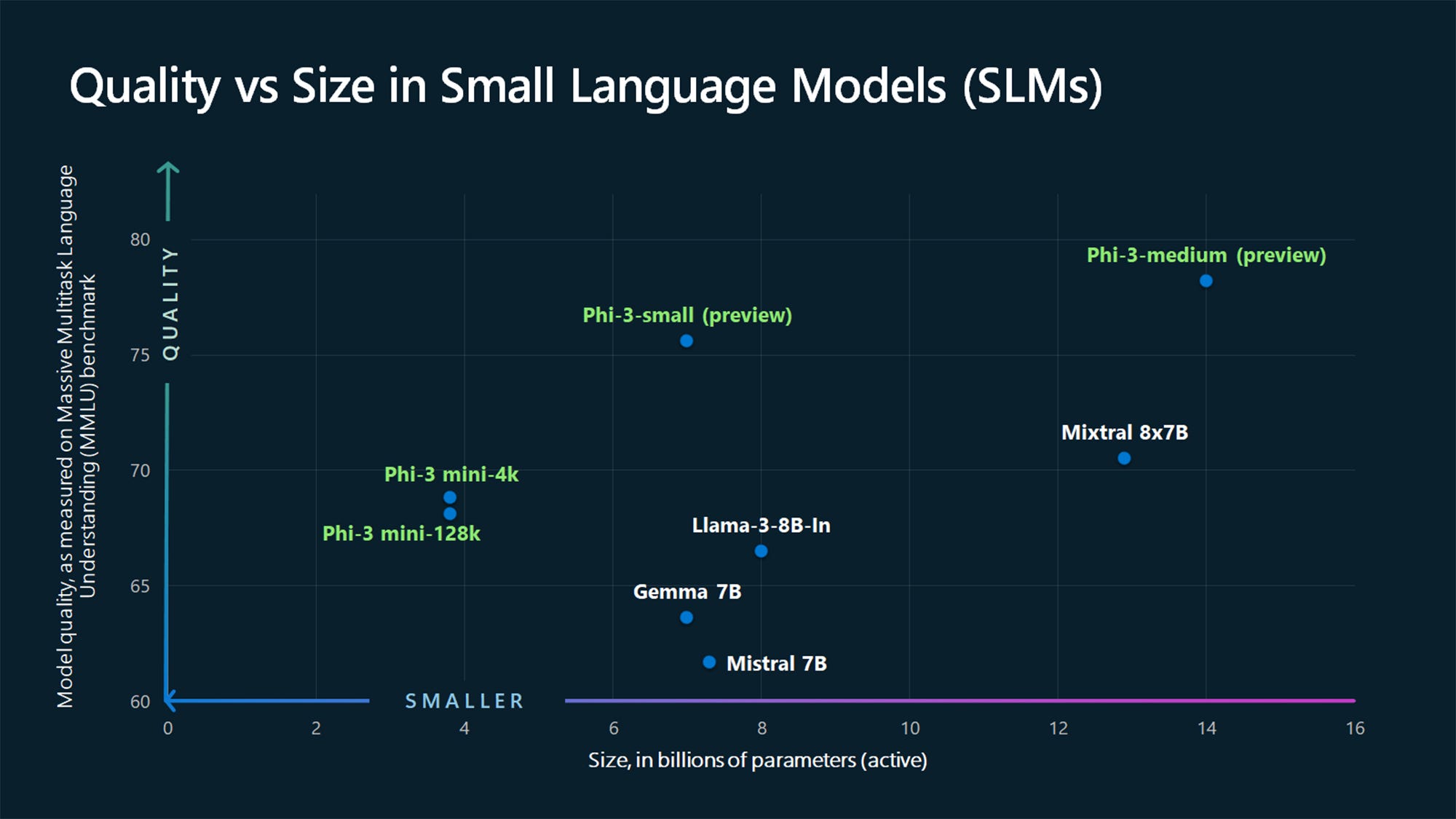

Microsoft has unveiled the Phi-3.5 series, its latest collection of small language models (SLMs). The lineup includes three models: Phi-3.5-mini-instruct, Phi-3.5-MoE-instruct (a Mixture of Experts model), and Phi-3.5-vision-instruct. They are designed as lightweight alternatives to competitors such as Google’s Gemini 1.5 Flash and Meta’s Llama 3.1, and on some benchmarks even OpenAI’s GPT-4o.

The Phi-3.5-mini-instruct model has 3.82 billion parameters. The Phi-3.5-MoE-instruct model has 41.9 billion parameters in total, but only 6.6 billion of them are active for any given token. The Phi-3.5-vision-instruct model, focused on visual tasks, has 4.15 billion parameters. Parameter count is a rough measure of a model's capacity; because the MoE model activates only a fraction of its parameters per token, its per-token compute is closer to that of a much smaller dense model.
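To make the gap between total and active parameters concrete, the short Python sketch below uses the parameter counts quoted above to compute the share of each model's parameters used per token, plus a rough memory estimate for its weights. It assumes 16-bit weights (2 bytes per parameter) and treats the mini and vision models as dense, with all parameters active; the names `PARAMS_B`, `active_fraction`, and `weight_memory_gb` are illustrative, and the estimate ignores activations and the KV cache.

```python
# Parameter counts quoted in the article, in billions: (total, active per token).
PARAMS_B = {
    "Phi-3.5-mini-instruct": (3.82, 3.82),    # assumed dense: all parameters active
    "Phi-3.5-MoE-instruct": (41.9, 6.6),
    "Phi-3.5-vision-instruct": (4.15, 4.15),  # assumed dense: all parameters active
}

BYTES_PER_PARAM_BF16 = 2  # assumption: 16-bit (bf16) weights


def active_fraction(model: str) -> float:
    """Share of the model's parameters used for each token."""
    total, active = PARAMS_B[model]
    return active / total


def weight_memory_gb(model: str, bytes_per_param: int = BYTES_PER_PARAM_BF16) -> float:
    """Rough weight-only footprint in decimal GB (ignores activations and KV cache)."""
    total, _ = PARAMS_B[model]
    # billions of parameters x bytes per parameter = gigabytes (1 GB = 1e9 bytes)
    return total * bytes_per_param


for name in PARAMS_B:
    print(f"{name}: {active_fraction(name):.1%} active, "
          f"~{weight_memory_gb(name):.1f} GB of 16-bit weights")
```

Under these assumptions, the MoE model uses only about 16% of its parameters per token, yet still needs roughly 84 GB to hold its 16-bit weights, because every expert must be resident in memory even though only a few run for each token.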

A standout feature of all three models in the Phi-3.5 series is their support for a 128k-token context window, which lets them work with long inputs such as lengthy documents; the vision model can also take images as input, including multiple frames from a video. Microsoft trained the models on high-quality, reasoning-dense datasets, with training runs of different lengths for each model, aiming to make them useful across diverse applications.

The Phi-3.5-mini-instruct model excels at fast reasoning tasks such as code generation and solving math problems. The Phi-3.5-MoE-instruct model combines multiple specialized "expert" subnetworks, with a router choosing which experts process each token; it is designed to handle complex tasks across multiple languages. The Phi-3.5-vision-instruct model can process both text and images, which makes it well suited to summarizing videos, analyzing charts, and other visual tasks.
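The expert-routing idea behind Phi-3.5-MoE-instruct can be illustrated with a toy top-k gating layer. This is a generic sketch in the style of common MoE designs, not Microsoft's implementation: it assumes a linear router, top-2 selection (the Phi-3.5-MoE model card reportedly describes 16 experts with 2 active per token), and a softmax renormalized over the selected experts only. The experts are hypothetical stand-ins that simply scale their input, and all function names are illustrative.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Return (expert_index, gate_weight) for the k highest-scoring experts.

    Gate weights are a softmax over the selected logits only, so they sum to 1.
    """
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Toy MoE layer for one token: only the k selected experts are evaluated."""
    # One router score per expert: dot product of that expert's router row with the token.
    logits = [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    for idx, gate in top_k_route(logits, k):
        y = experts[idx](x)  # unselected experts are never called
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out


def make_expert(scale: float) -> Expert:
    """Hypothetical expert: scales its input (a stand-in for a feed-forward block)."""
    return lambda v: [scale * v_i for v_i in v]


# Demo: 16 experts, top-2 routing, a 4-dimensional token.
NUM_EXPERTS, DIM = 16, 4
rng = random.Random(0)
router = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(float(i + 1)) for i in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
print(moe_forward(token, router, experts, k=2))
```

Only 2 of the 16 expert functions are called for the token, which is the mechanism that keeps the active parameter count far below the total.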

Developers can download, customize, and integrate these models through Hugging Face. Microsoft has released them under the MIT license, a permissive open-source license that allows commercial use and modification.
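For readers who want to try the models, here is a minimal sketch of running Phi-3.5-mini-instruct from Hugging Face with the `transformers` library. It assumes the repository ID `microsoft/Phi-3.5-mini-instruct`, a recent `transformers` release (4.43 or later) that accepts chat-style message lists in the `text-generation` pipeline, PyTorch, and enough memory for the weights. The helper names `build_messages` and `generate` are hypothetical, and the example call is left commented out because it downloads several gigabytes on first use.

```python
from typing import Dict, List

# Assumed Hugging Face repository ID for the mini model.
MODEL_ID = "microsoft/Phi-3.5-mini-instruct"


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful AI assistant.") -> List[Dict[str, str]]:
    """Chat-format input (role/content dicts) for a text-generation pipeline."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Download (on first call) and run the model locally; runs on CPU by default."""
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import pipeline

    pipe = pipeline("text-generation", model=MODEL_ID, torch_dtype="auto")
    output = pipe(
        build_messages(user_prompt),
        max_new_tokens=max_new_tokens,
        return_full_text=False,  # return only the newly generated reply
        do_sample=False,         # greedy decoding for repeatable output
    )
    return output[0]["generated_text"]


# Usage (downloads the model weights on first run):
# print(generate("Explain what a mixture-of-experts model is in two sentences."))
```

Building the pipeline inside `generate` reloads the model on every call, which keeps the sketch short; in real use you would create the pipeline once and reuse it.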
