
Embracing Explainable AI in Banking: A Path to Greater Transparency

22 August 2024 | Zaker Adham

As the banking and financial sectors continue to generate vast amounts of data, the application of Artificial Intelligence (AI) across various operations—ranging from front-office to back-office activities—is steadily increasing.

AI's role in fraud detection, risk management, predictive analytics, and process automation is becoming essential. However, concerns about the alignment of AI models with regulatory standards and the risks of biases in Machine Learning (ML) algorithms are growing, particularly when data quality issues or a lack of business context affect the models.

To address these concerns, global regulators are now demanding that financial institutions implement transparent AI models that can be easily analyzed and understood. In this context, Explainable AI (XAI) is emerging as a vital component for integrating AI into highly regulated industries like banking and finance.

The Three Pillars of Explainable AI

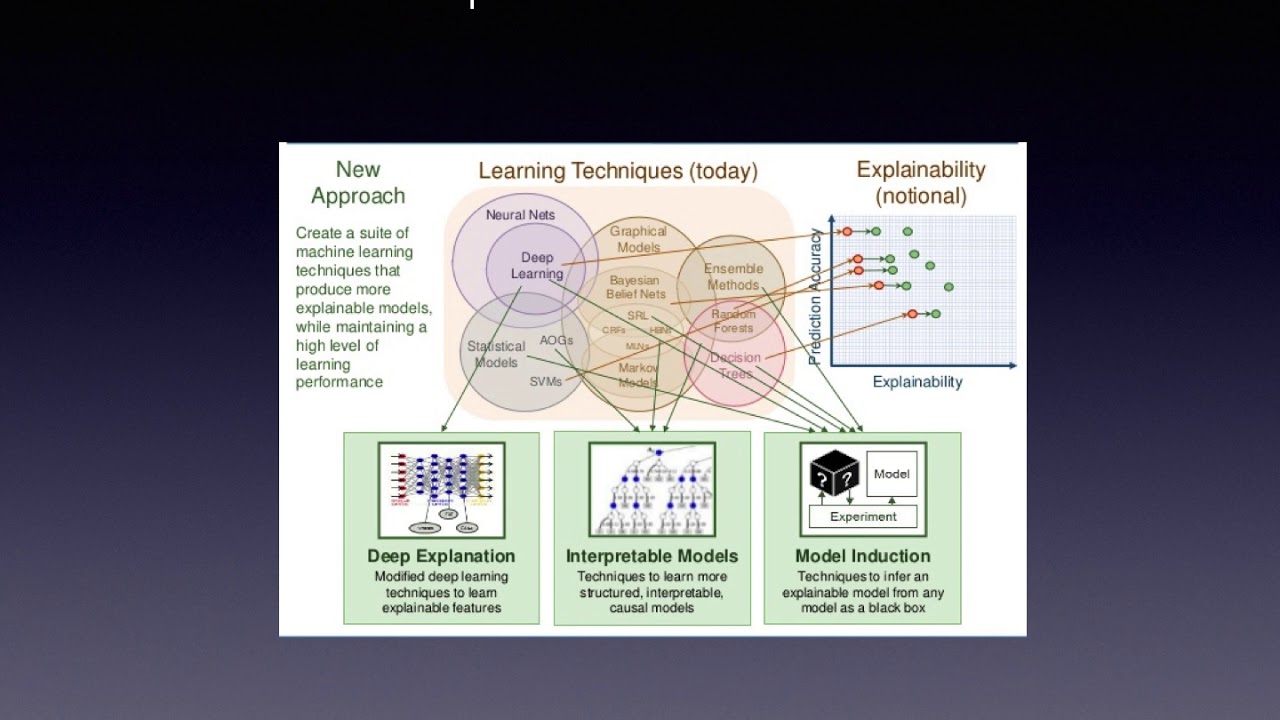

According to the U.S. Defense Advanced Research Projects Agency (DARPA), XAI can be realized through three main approaches:

- Deep Explanation: This approach modifies deep learning techniques so that the model learns explainable features. The underlying networks are still not easily understood by non-experts, which poses challenges for transparency.

- Interpretable Models: These models are designed to be more structured and understandable, utilizing techniques such as statistical models, graphical models, or Random Forests. However, even transparent models like Logistic Regression or Decision Trees can become "black boxes" when dealing with a large number of features.

- Model Induction: This approach treats the model as a black box, adjusting input values and weights and measuring their impact on outputs in order to infer an explanation. Model induction techniques also include surrogate or local modeling, in which a simpler model approximates the black box's behavior to enhance interpretability.
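To make the model induction idea concrete, here is a minimal sketch of input perturbation: each input is nudged slightly and the change in the output is recorded. The function `black_box_score`, its coefficients, and the applicant values are all hypothetical stand-ins for whatever opaque model a bank might run; they are not taken from DARPA or any real system.

```python
import math

def black_box_score(income, debt_ratio, years_employed):
    """Hypothetical opaque credit model; a stand-in for any trained model."""
    z = 0.00003 * income - 4.0 * debt_ratio + 0.15 * years_employed - 1.0
    return 1.0 / (1.0 + math.exp(-z))

def local_sensitivity(model, inputs, rel_step=0.01):
    """Nudge each input by rel_step (1% by default) and report the local slope.

    The slopes act as the coefficients of a simple linear surrogate that is
    only valid near the given inputs.
    """
    base = model(**inputs)
    effects = {}
    for name, value in inputs.items():
        step = abs(value) * rel_step or rel_step  # fall back if value is 0
        nudged = dict(inputs, **{name: value + step})
        effects[name] = (model(**nudged) - base) / step
    return base, effects

# Illustrative applicant; the numbers are assumptions chosen for the example.
applicant = {"income": 60000.0, "debt_ratio": 0.35, "years_employed": 4.0}
base, effects = local_sensitivity(black_box_score, applicant)

for name, slope in effects.items():
    direction = "raises" if slope > 0 else "lowers"
    print(f"Increasing {name} {direction} the score (slope {slope:.6g})")
```

The slopes only describe the model's behavior close to this one applicant, which is why such explanations are called local. A production approach would typically sample many perturbations and fit a surrogate model to them rather than nudging one input at a time.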

The Role of Rules and Fuzzy Logic in XAI

Explainability is not only crucial for regulatory compliance but also for improving customer relations. One method for enhancing explainability is through the use of IF-Then rules, which can make AI models more transparent. However, traditional crisp IF-Then rules can struggle with variables that lack clear boundaries, such as income or age.
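The boundary problem with crisp rules can be seen in a few lines. This is only an illustration: the function name `crisp_income_label` and the $150,000 cutoff are assumptions made for the example, not values from any real scoring policy.

```python
def crisp_income_label(income, threshold=150_000):
    """Crisp IF-Then rule: IF income >= threshold THEN "High" ELSE "Low".

    The threshold is a hypothetical cutoff chosen for illustration.
    """
    return "High" if income >= threshold else "Low"

# Two applicants one dollar apart land in different categories.
for income in (149_999, 150_000):
    print(income, "->", crisp_income_label(income))
```

A one-dollar difference flips the label, even though no human analyst would treat the two applicants differently.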

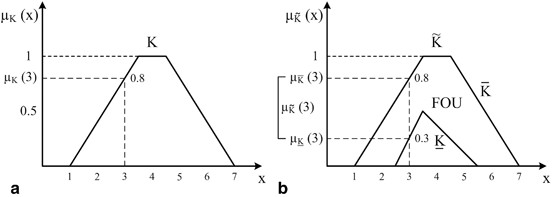

A promising alternative is the use of Fuzzy Logic Systems (FLS), which apply linguistic labels to variables, enabling a more nuanced understanding of AI model outputs. For instance, using Type-1 fuzzy sets, a value like $150,000 could belong to both the "Low" and "High" income categories, each with its own degree of membership between 0 and 1, reflecting the ambiguity inherent in human perception. Type-2 fuzzy sets take this a step further by treating the membership degree itself as uncertain: all candidate Type-1 sets are embedded within a Footprint of Uncertainty (FoU), providing an even more flexible approach to handling imprecise data.
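The difference between the two kinds of fuzzy set can be sketched as follows. The breakpoints ($100,000 and $200,000), the `blur` width, and the function names are assumptions chosen so that $150,000 sits midway between the labels. The Type-2 part uses the simpler interval form, where the Footprint of Uncertainty is the band between a lower and an upper membership function.

```python
def ramp_up(x, start, end):
    """Membership rising linearly from 0 at `start` to 1 at `end`."""
    if x <= start:
        return 0.0
    if x >= end:
        return 1.0
    return (x - start) / (end - start)

def type1_income(x):
    """Type-1 sets: one membership degree per label (hypothetical breakpoints)."""
    high = ramp_up(x, 100_000, 200_000)
    return {"Low": 1.0 - high, "High": high}

def type2_high_income(x, blur=20_000):
    """Interval Type-2 set for "High": (lower, upper) membership bounds.

    Shifting the breakpoints by +/- blur creates the band (the FoU) that
    contains every Type-1 ramp lying between the two shifted ramps.
    """
    lower = ramp_up(x, 100_000 + blur, 200_000 + blur)
    upper = ramp_up(x, 100_000 - blur, 200_000 - blur)
    return lower, upper

income = 150_000
print(type1_income(income))       # partial membership in both labels
print(type2_high_income(income))  # interval that contains the Type-1 degree
```

With these assumed breakpoints, the Type-1 degree for "High" at $150,000 falls inside the Type-2 interval, which is what it means for the Type-1 set to be embedded in the FoU.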

Although FLS is not yet widely recognized as an XAI technique, it has shown promise in bridging the gap between data-driven insights and human expertise. For example, Logical Glue has developed systems that generate FLS with concise IF-Then rules and small rule bases, achieving prediction accuracies comparable to black-box models.

The Future of Explainable AI in Banking

The evolution of FLS and other XAI techniques is paving the way for more transparent, fair, and safe AI models in banking. By integrating expert knowledge with data-driven insights, these models not only comply with regulatory standards but also foster greater trust among users. As AI continues to transform the banking sector, the adoption of explainable models will be crucial for ensuring that AI benefits everyone.