Qwen2-Math: A New Era for AI Math Experts

10 August 2024 | Zaker Adham

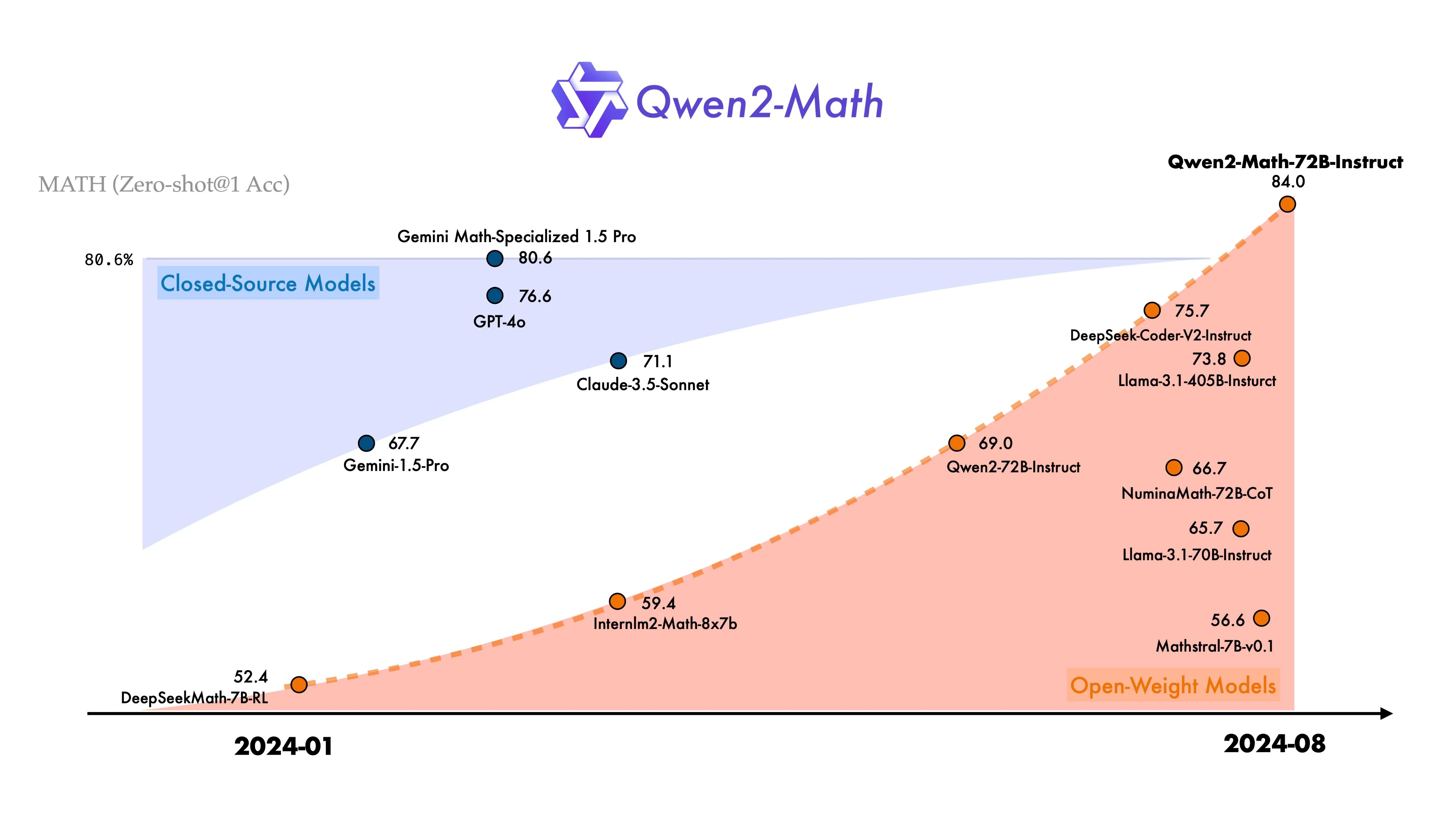

Alibaba Cloud's Qwen team has introduced Qwen2-Math, a new series of large language models designed to solve complex mathematical problems.

Built on the Qwen2 foundation, the models target arithmetic and more advanced mathematical problems, and the Qwen team reports that they surpass previous leading models on math benchmarks.

The Qwen team developed Qwen2-Math using a comprehensive Mathematics-specific Corpus, which includes high-quality resources such as web texts, books, code, exam questions, and synthetic data generated by Qwen2.

Evaluations on English and Chinese mathematical benchmarks, including GSM8K, MATH, MMLU-STEM, CMATH, and GaoKao Math, showcased Qwen2-Math's capabilities. The flagship model, Qwen2-Math-72B-Instruct, reportedly outperformed proprietary models such as GPT-4o and Claude 3.5 Sonnet on several mathematical tasks. "Qwen2-Math-Instruct achieves the best performance among models of the same size, with RM@8 outperforming Maj@8, particularly in the 1.5B and 7B models," noted the Qwen team.

The team attributes this gap to the math-specific reward model used during development, which scores sampled solutions so that the strongest one can be selected.
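To make the RM@8 versus Maj@8 comparison concrete, here is a minimal sketch of the two selection rules as they are commonly defined: Maj@8 takes a majority vote over eight sampled final answers, while RM@8 returns the answer that a reward model scores highest. The function names, the sample answers, and the reward scores below are hypothetical illustrations, not the Qwen team's code or data.

```python
from collections import Counter


def maj_at_k(answers):
    """Majority vote: return the most frequent final answer among the samples."""
    return Counter(answers).most_common(1)[0][0]


def rm_at_k(answers, scores):
    """Reward-model selection: return the answer with the highest reward score."""
    best_index = max(range(len(answers)), key=lambda i: scores[i])
    return answers[best_index]


# Hypothetical data: eight sampled final answers for one problem,
# with made-up reward scores (one score per sample).
samples = ["12", "15", "12", "12", "15", "18", "12", "15"]
rewards = [0.21, 0.93, 0.30, 0.25, 0.88, 0.10, 0.28, 0.90]

maj_answer = maj_at_k(samples)          # picks the most common answer
rm_answer = rm_at_k(samples, rewards)   # picks the top-scored answer

print("Maj@8 picks:", maj_answer)
print("RM@8 picks:", rm_answer)
```

In this toy case the two rules disagree: the vote follows the most common answer, while the reward model can favor a less frequent answer it scores higher. That is the situation in which a good reward model can beat majority voting.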

Qwen2-Math also posted strong results on challenging mathematical competitions such as the American Invitational Mathematics Examination (AIME) 2024 and the American Mathematics Competitions (AMC) 2023.

To keep benchmark results from being inflated by test-set contamination, the Qwen team applied decontamination methods during both the pre-training and post-training phases. This involved removing duplicate samples and filtering out training data that overlaps with test sets.
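The two steps described here, deduplication and test-set overlap filtering, can be sketched roughly as follows. This is an illustrative approach using normalized exact-match deduplication and word n-gram overlap; the function names, the n-gram size of 5, and the sample strings are assumptions made for the example, not the Qwen team's actual pipeline or thresholds.

```python
import re


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so near-identical strings match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def ngrams(text, n):
    """Return the set of word n-grams in the normalized text."""
    tokens = normalize(text).split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def decontaminate(train_samples, test_samples, n=5):
    """Drop duplicate training samples and any sample sharing an n-gram with a test item.

    n=5 is an arbitrary choice for this small example (an assumption).
    """
    test_grams = set()
    for item in test_samples:
        test_grams |= ngrams(item, n)

    kept, seen = [], set()
    for sample in train_samples:
        key = normalize(sample)
        if key in seen:                      # duplicate of an earlier sample
            continue
        seen.add(key)
        if ngrams(sample, n) & test_grams:   # overlaps with a test item
            continue
        kept.append(sample)
    return kept


# Hypothetical training and test data.
train = [
    "What is 2 + 2? The answer is 4.",
    "what is 2 + 2?  The answer is 4!",
    "If a train travels 60 miles in 2 hours, what is its average speed?",
    "Compute the derivative of x squared.",
]
test = ["A train travels 60 miles in 2 hours, what is its average speed in mph?"]

cleaned = decontaminate(train, test)
print(cleaned)
```

Here the second sample is dropped as a duplicate of the first after normalization, and the third is dropped because it shares word sequences with the test item, leaving two training samples.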

Looking ahead, the Qwen team plans to extend Qwen2-Math beyond English, with bilingual models supporting English and Chinese and further multilingual models in development. The aim is to make advanced mathematical problem-solving accessible to a global audience.

"We will continue to enhance our models' ability to solve complex and challenging mathematical problems," affirmed the Qwen team.